Executive Summary

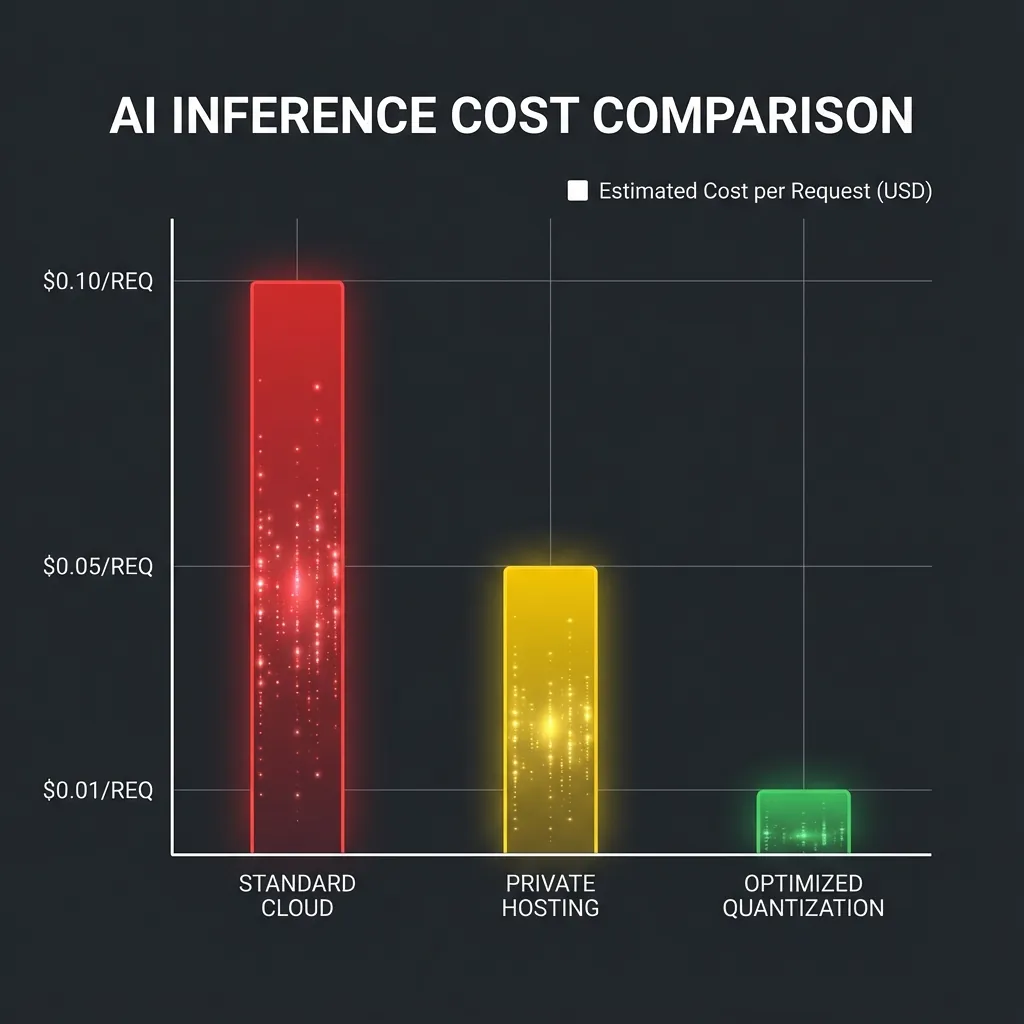

- The Problem: As models grow (70B+ parameters), memory bandwidth bottlenecks drive inference costs exponentially higher, often making private hosting cost-prohibitive compared to generic APIs.

- The Solution: "Inference Engineering"—specifically 4-bit quantization and speculative decoding—can reduce hardware CAPEX by 80% and OPEX by 60%.

- The Outcome: Optimized architectures allow enterprises to run sovereignty-compliant, on-premise models at a lower cost-per-token than public providers.

There is a dangerous phase in every generative AI project: the transition from Proof of Concept (PoC) to Production. In the PoC phase, cost doesn't matter. You use the most powerful model, you ignore latency, and you pay per token. But when you scale to thousands of users, the unit economics suddenly break. You are burning cash faster than you are generating value.

For Technical Managers, understanding the levers of Inference Economics is as critical as understanding the algorithms themselves. It is the difference between a science project and a viable business.

1. The Memory Wall: Why VRAM is the New Gold

Why is inference expensive? It’s rarely about compute (FLOPs); it’s about memory bandwidth.

Large Language Models are massive. To generate a single token, the GPU must move the entire weight of the model through its memory chips. For a 70B parameter model in standard FP16 precision, you need roughly 140GB of VRAM just to load the model.

This forces you into the realm of Data Center GPUs (NVIDIA A100s or H100s), which cost thousands of dollars per month per card. If you are serving internal employee tools, this CAPEX can kill the ROI of the project immediately.

2. Solution A: Aggressive Quantization

The first lever we pull is Quantization. This is the process of reducing the numerical precision of the model's weights.

Research has shown that LLMs are remarkably resilient to "noise." We can compress the weights from 16-bit floating point numbers to 4-bit integers with negligible loss in reasoning capability (typically < 1% increase in perplexity).

The Business Impact:

- FP16 Model (70B): Requires ~140GB VRAM → Needs 2x A100 (80GB). Cost: High.

- 4-Bit Model (70B): Requires ~35-40GB VRAM → Fits on 2x Consumer RTX 4090s (24GB). Cost: ~10x Lower.

By shifting from Data Center hardware to high-end Consumer hardware (or cheaper cloud instances), we fundamentally alter the break-even point of the application.

3. Solution B: Speculative Decoding

Once we solve the memory capacity problem, we face the latency problem. Users expect instant answers.

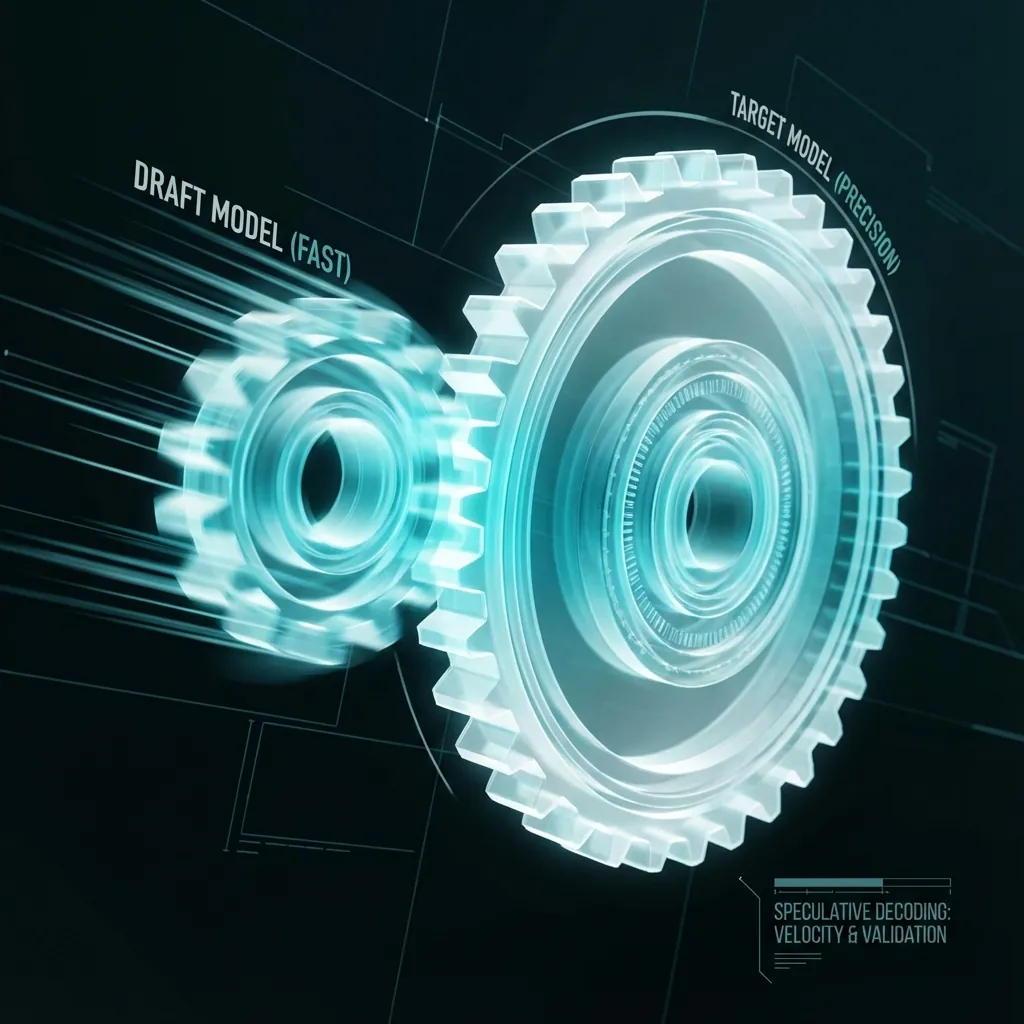

Speculative Decoding is a technique where we run two models simultaneously:

- The Drafter (Small & Fast): A tiny 7B model guesses the next 3-4 words incredibly fast.

- The Verifier (Big & Smart): The massive 70B model checks those guesses in a single parallel pass.

Because the "Verifier" can check 5 tokens as fast as it can generate 1, we get a 2x-3x speedup in throughput without sacrificing quality. If the Drafter guesses right, we get free speed. If it guesses wrong, we discard and regenerate with no penalty other than a few milliseconds.

Conclusion: Strategic Architecture

Building an AI product isn't just about prompt engineering. It's about designing a serving architecture that aligns with business realities.

Technical managers must ask their engineering teams not just "Can we build this?", but "What is the cost per token?" and "Where are we on the quantization curve?" The answers to these questions will determine if your AI initiative scales or stalls.

Need to optimize your model serving infrastructure? Audit your architecture with us.