Executive Summary

- The Paradigm Shift: We are transitioning from "Copilots" (human-in-the-loop) to "Agents" (human-on-the-loop), enabling asynchronous execution of complex business logic.

- The Value Prop: Early enterprise adopters are reducing manual administrative overhead by 40-60% by deploying agents for tasks like invoice reconciliation, Tier-1 support, and log analysis.

- The Roadmap: Success requires a tiered approach—starting with read-only retrieval agents before graduating to autonomous transactional agents with write-access.

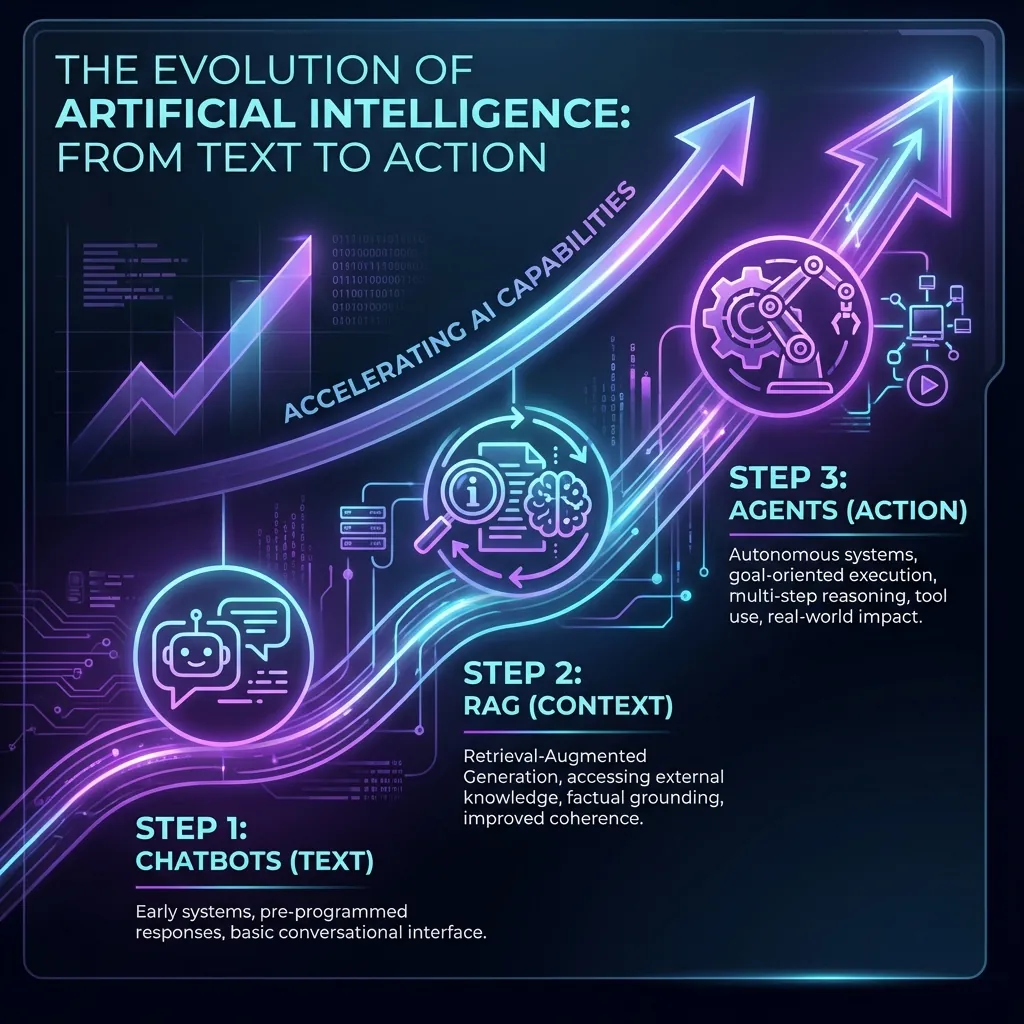

The first wave of Generative AI was defined by the chatbot. Whether it was ChatGPT or a custom internal RAG (Retrieval-Augmented Generation) tool, the interaction model was identical: a human types a prompt, and the machine generates text.

While valuable for drafting emails or summarizing documents, this "Chat" paradigm is fundamentally limited by human bandwidth. The human is still the orchestrator. If you want the AI to do 10 things, you have to prompt it 10 times.

We are now entering the Agentic Era. In this phase, the AI ceases to be a passive responder and becomes an active executor.

1. The Anatomy of an Agent

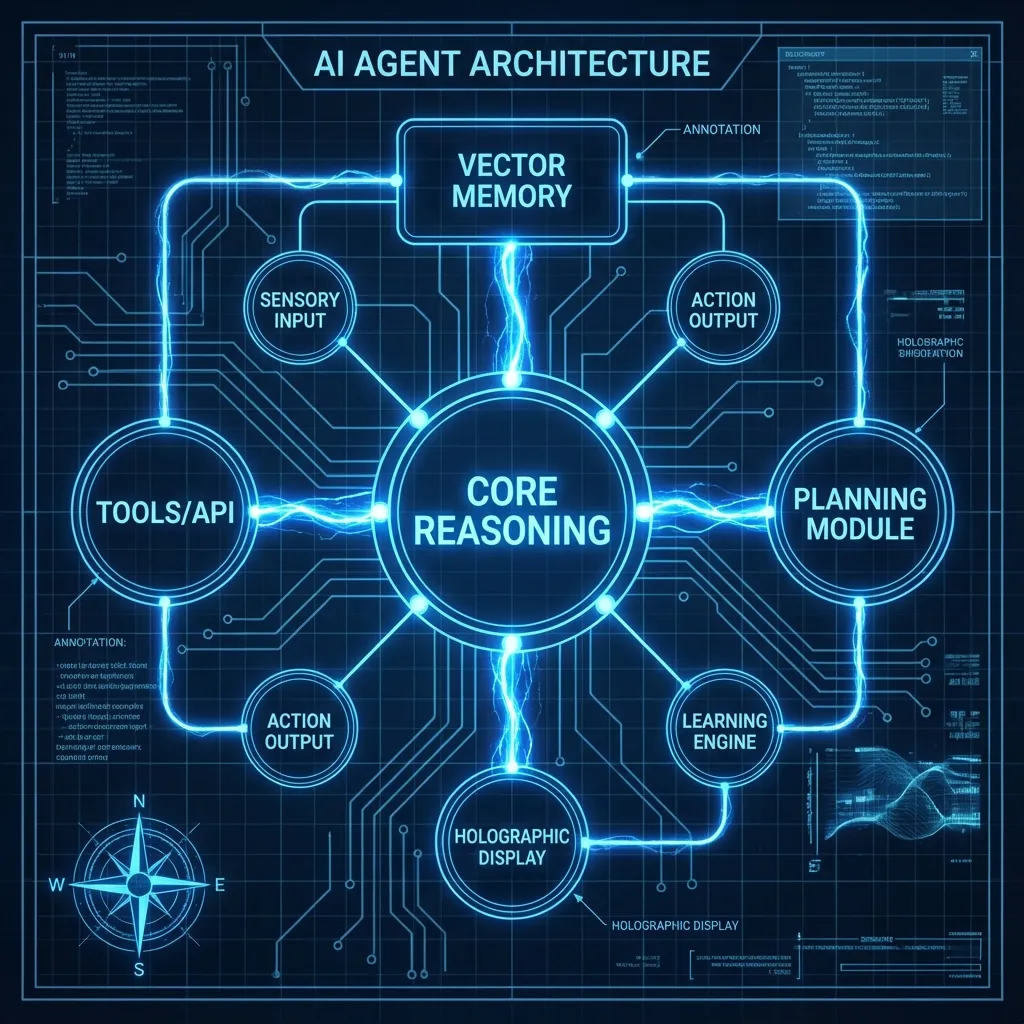

To understand the strategic implication, we must demystify the architecture. What makes an "Agent" different from a standard LLM application?

A standard LLM app is Input → Processing → Output. It is linear.

An Agentic system is circular. It operates in a loop of Perception, Reasoning, Action, and Observation.

- Perception/Memory: The agent retrieves context—previous interactions, user manuals, or database records.

- Reasoning Engine (LLM): The "brain" decides what to do next based on the context. It still generates tokens, but those tokens encode a structured decision, typically the next function call and its arguments, rather than free-form prose.

- Tools & Actions: The agent has access to a toolkit—APIs, calculators, CRM hooks, or SQL clients. It executes a tool.

- Observation: The agent reads the output of the tool. Did the API call fail? Did the database return zero results? Based on this observation, it updates its plan.
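The four stages above can be sketched as a short loop. This is a minimal illustration under stated assumptions, not a production design: `TOOLS`, `lookup_order`, and `scripted_policy` are hypothetical names, and `choose_action` is a plain function standing in for the LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical toolkit: a single illustrative tool, not a real API.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",
}


@dataclass
class Agent:
    # Stand-in for the LLM: maps memory to (tool_name, argument), or None to stop.
    choose_action: Callable[[list[str]], Optional[tuple[str, str]]]
    memory: list[str] = field(default_factory=list)

    def run(self, task: str, max_steps: int = 5) -> list[str]:
        self.memory.append(f"task: {task}")              # Perception / memory
        for _ in range(max_steps):                       # hard cap on iterations
            decision = self.choose_action(self.memory)   # Reasoning
            if decision is None:
                break
            tool_name, arg = decision
            try:
                result = TOOLS[tool_name](arg)           # Action
            except Exception as exc:                     # failures become observations
                result = f"error: {exc!r}"
            self.memory.append(f"{tool_name}({arg}) -> {result}")  # Observation
        return self.memory


def scripted_policy(memory: list[str]) -> Optional[tuple[str, str]]:
    # A real system would call the model here; this stub acts once, then stops.
    if len(memory) > 1:
        return None
    return ("lookup_order", "A17")


trace = Agent(choose_action=scripted_policy).run("Where is order A17?")
```

The `max_steps` cap is a deliberate choice in this sketch: because the system is circular, something outside the model has to guarantee that the loop terminates.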

2. Strategic Framework: The Agentic Maturity Model

For technical leaders, the risk is not in the technology itself, but in deploying it too loosely. We recommend a Three-Tier Maturity Model for rolling out agentic workflows.

Tier 1: The Research Agent (Read-Only)

Goal: Information Synthesis.

Risk Profile: Low.

Example: An automated "Market Analyst" that browses 50 competitor websites every morning, summarizes price changes, and emails a PDF report to the Strategy team.

Why start here? Because the agent has no "write" access. It cannot delete data or crash a server. It builds trust in the reasoning capabilities of the model.
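One way to make "no write access" a property of the system rather than of the prompt is to filter the toolkit before the agent ever sees it. The sketch below assumes each tool is tagged with a `writes` flag; the `Tool` class and the tool names (`fetch_page`, `update_price`, `delete_record`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    name: str
    func: Callable[..., object]
    writes: bool  # True if the tool can modify external state


def read_only_toolkit(tools: list[Tool]) -> dict[str, Tool]:
    """Keep only the tools that cannot modify state."""
    return {t.name: t for t in tools if not t.writes}


def call_tool(toolkit: dict[str, Tool], name: str, *args: object) -> object:
    # Any tool outside the toolkit is rejected, whatever the model asked for.
    if name not in toolkit:
        raise PermissionError(f"tool {name!r} is not available at this tier")
    return toolkit[name].func(*args)


# Hypothetical tools, for illustration only.
ALL_TOOLS = [
    Tool("fetch_page", lambda url: f"fetched {url}", writes=False),
    Tool("update_price", lambda sku, price: "updated", writes=True),
    Tool("delete_record", lambda record_id: "deleted", writes=True),
]

tier1_tools = read_only_toolkit(ALL_TOOLS)
```

With this arrangement, a Tier 1 agent that "decides" to call `delete_record` gets a `PermissionError` instead of a side effect.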

Tier 2: The Copilot Agent (Human-in-the-Loop)

Goal: Drafting & Staging.

Risk Profile: Medium.

Example: A "Customer Support Drafter." The agent reads an incoming ticket, looks up the user's order history, checks the refund policy, and drafts a reply. It does not send it. A human agent reviews and clicks "Send."

The Value: Handling time can fall by as much as 80%, while every outgoing reply is still approved by a human.
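The draft-then-approve pattern can be sketched as a review queue in which the agent is only able to stage a reply, and the send function is reachable only through a human approval step. `ReviewQueue`, `Draft`, and the `send` callback are hypothetical names for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    PENDING = "pending"
    SENT = "sent"
    REJECTED = "rejected"


@dataclass
class Draft:
    ticket_id: str
    body: str
    status: Status = Status.PENDING
    reviewer: Optional[str] = None


class ReviewQueue:
    def __init__(self, send: Callable[[str, str], None]) -> None:
        self._send = send                    # only approve() ever calls this
        self._drafts: dict[str, Draft] = {}

    def stage(self, ticket_id: str, body: str) -> Draft:
        """Called by the agent: stores a draft, sends nothing."""
        draft = Draft(ticket_id, body)
        self._drafts[ticket_id] = draft
        return draft

    def approve(self, ticket_id: str, reviewer: str,
                edited_body: Optional[str] = None) -> Draft:
        """Called by a human: optionally edits the draft, then sends it."""
        draft = self._drafts[ticket_id]
        if draft.status is not Status.PENDING:
            raise ValueError(f"draft for {ticket_id} is already {draft.status.value}")
        if edited_body is not None:
            draft.body = edited_body
        self._send(ticket_id, draft.body)
        draft.status, draft.reviewer = Status.SENT, reviewer
        return draft

    def reject(self, ticket_id: str, reviewer: str) -> Draft:
        draft = self._drafts[ticket_id]
        draft.status, draft.reviewer = Status.REJECTED, reviewer
        return draft
```

Recording the reviewer on each draft also gives an audit trail of who approved what, which helps when deciding whether a workflow is ready for more autonomy.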

Tier 3: The Autonomous Agent (Human-on-the-Loop)

Goal: Asynchronous Execution.

Risk Profile: High (requires strict guardrails).

Example: "Supply Chain Optimizer." The agent detects a shipment delay via API, autonomously checks inventory at alternative warehouses, and re-allocates stock to fulfill high-priority orders, notifying the manager only after the action is complete.

3. The Architecture of Governance

Moving to Tier 3 requires more than just better prompts; it requires a new governance architecture. At Artiportal, we implement Deterministic Guardrails.

These are code-level rules that run outside the LLM. For example, if a Financial Agent decides to transfer money, a hard-coded Python layer checks: "Is the amount < $5,000?" and "Is the destination IBAN on the whitelist?" If either check fails, the action is blocked regardless of what the AI "thinks."

This "Sandbox" approach allows enterprises to safely deploy powerful agents. We treat the LLM as an untrusted reasoning engine, while the surrounding infrastructure ensures compliance and security.
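As a rough illustration of the transfer example, a guardrail layer might look like the sketch below. It is a simplified sketch rather than our production implementation: `TransferRequest`, `check_transfer`, and `execute_transfer` are hypothetical names, the IBAN shown is a commonly used documentation example, and the positive-amount check is an added assumption not stated above.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable

MAX_TRANSFER = Decimal("5000")  # rule from the text: amount must be < $5,000
ALLOWED_IBANS = frozenset({"DE89370400440532013000"})  # example entry only


@dataclass(frozen=True)
class TransferRequest:
    amount: Decimal
    destination_iban: str


class GuardrailViolation(Exception):
    """Raised when a proposed action breaks a hard-coded rule."""


def check_transfer(req: TransferRequest) -> None:
    # Assumption: non-positive amounts are rejected as well.
    if req.amount <= 0 or req.amount >= MAX_TRANSFER:
        raise GuardrailViolation(f"amount {req.amount} is outside the allowed range")
    iban = req.destination_iban.replace(" ", "").upper()  # normalise formatting
    if iban not in ALLOWED_IBANS:
        raise GuardrailViolation("destination IBAN is not on the whitelist")


def execute_transfer(req: TransferRequest,
                     transfer_fn: Callable[[TransferRequest], object]) -> object:
    # The checks run in plain code, after the LLM has proposed the action
    # and before anything touches the payment system.
    check_transfer(req)
    return transfer_fn(req)
```

Because `execute_transfer` is the only path to `transfer_fn`, a prompt injection or a reasoning error can change what the agent proposes but not what the system permits.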

Conclusion

The transition to agentic workflows is the defining technical challenge of the next 3 years. It moves AI from being a tool for individual productivity to a platform for organizational scale.

Is your infrastructure ready for autonomous agents? Request an architectural audit.